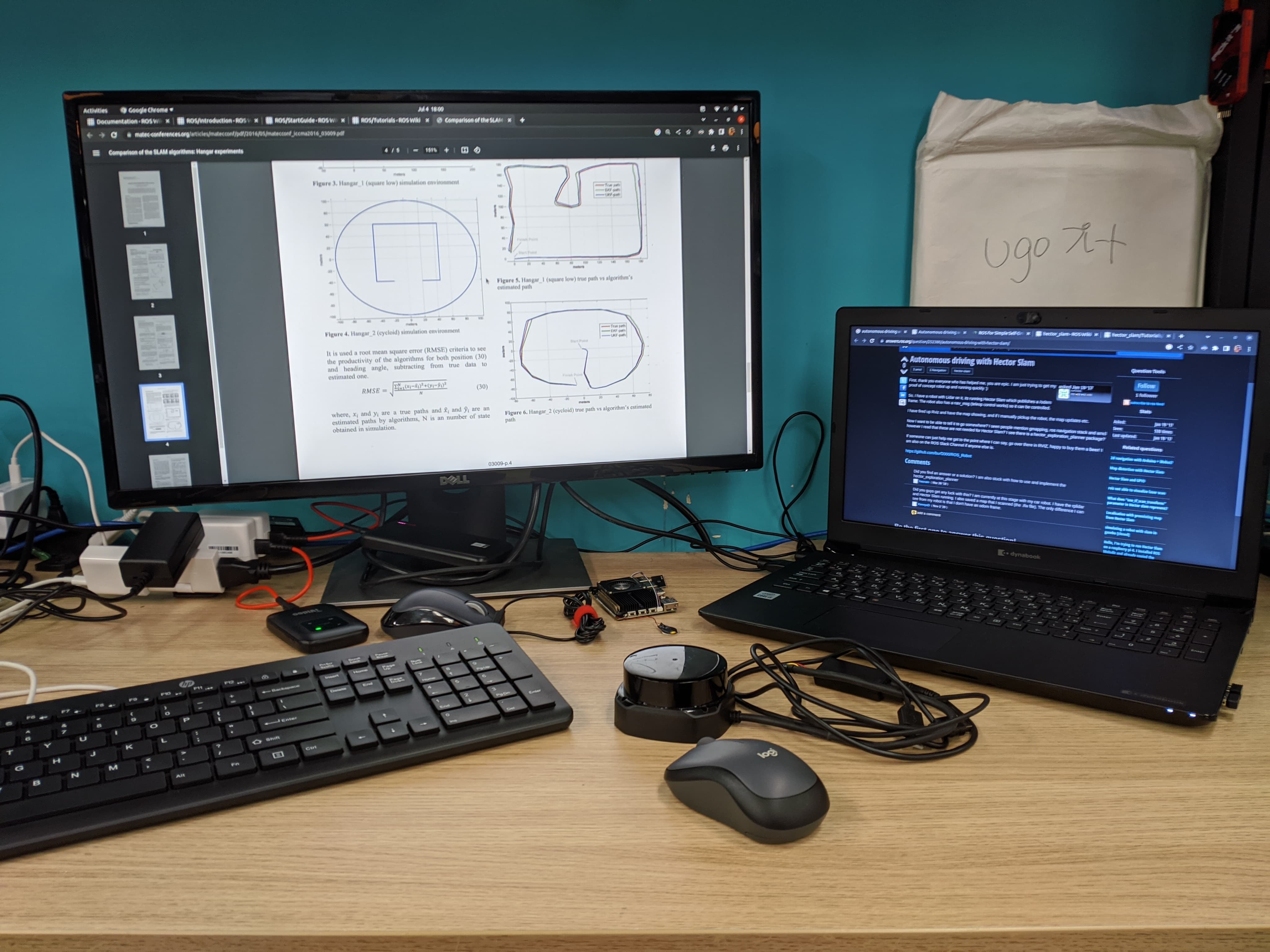

During my in-person internship at Ugo Inc, I worked on developing and deploying robotic solutions, with a focus on autonomous navigation using lidar mapping. The project had two main phases: mapping and autonomous navigation.

Project Overview

The goal of the project was autonomous navigation based on a lidar map: first build a detailed map of the environment with a lidar sensor, then use that map to navigate the robot autonomously. The code was organized into separate components, each with a single responsibility.

Mapping Phase

The mapping phase focused on creating a map of the environment. The key components and their functions were:

- SLAM Algorithm: Builds the map. Its launch file was modified so that the robot could be driven by joystick while mapping.

- Joystick Control: Lets the operator drive the robot with a joystick during mapping.

- Odometry Calculation: Receives twist messages, calculates odometry, and then publishes it.

- Data Publisher: Receives data from the server and publishes it as twist messages for odometry.

- Robot Description: Contains the URDF description of the robot.

- Lidar Sensor Launch: Responsible for launching the lidar sensor.

- Velocity to Array: Reads velocity data and converts it into an array for robot movement.
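To illustrate what the odometry component does, here is a minimal sketch of dead-reckoning integration from twist-style velocities. It is plain Python rather than the project's actual node, and the names `Pose2D` and `integrate_twist` are hypothetical. It assumes planar motion and that the velocities are expressed in the robot's body frame.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0      # metres, in the odometry frame
    y: float = 0.0      # metres, in the odometry frame
    theta: float = 0.0  # heading in radians


def integrate_twist(pose: Pose2D, vx: float, vy: float, wz: float, dt: float) -> Pose2D:
    """Advance the pose by one time step of length dt (seconds).

    vx, vy are linear velocities in the body frame (m/s) and wz is the
    angular velocity about the vertical axis (rad/s). These are assumed
    to come from the linear and angular parts of a twist message.
    """
    # Rotate the body-frame velocity into the odometry frame.
    dx = (vx * math.cos(pose.theta) - vy * math.sin(pose.theta)) * dt
    dy = (vx * math.sin(pose.theta) + vy * math.cos(pose.theta)) * dt
    theta = pose.theta + wz * dt
    # Wrap the heading so it stays within [-pi, pi].
    theta = math.atan2(math.sin(theta), math.cos(theta))
    return Pose2D(pose.x + dx, pose.y + dy, theta)
```

In a real node, a function like this would be called from the twist subscriber callback, with `dt` taken from the time between messages, and the resulting pose would be published as odometry.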

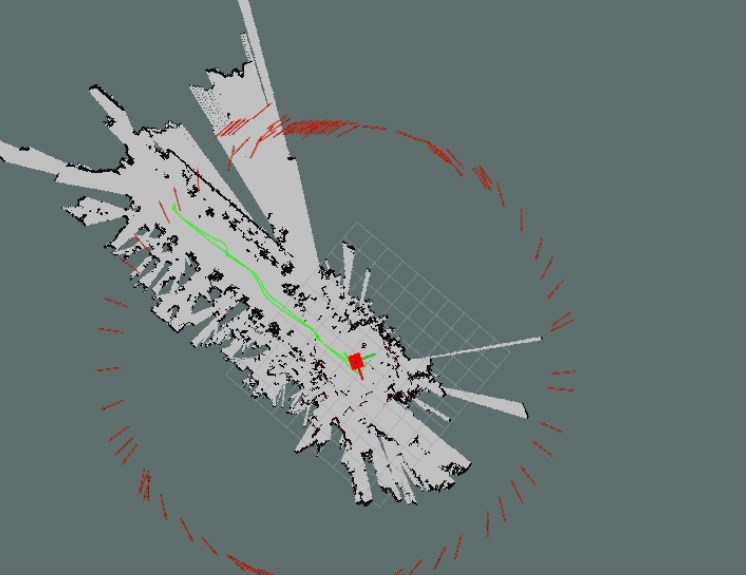

Navigation Phase

The navigation phase focused on autonomous navigation. The key components and their functions were:

- Odometry Calculation: Similar to the mapping phase, it calculates odometry from twist messages and publishes it.

- Data Publisher: Receives data from the server and publishes it as twist messages for odometry.

- Robot Description: Contains the URDF description of the robot.

- Velocity to Array: Reads velocity data and converts it into an array for robot movement.

- Navigation Stack: Contains the costmap configurations, a launch file that starts all the nodes required for autonomous navigation, and a folder of saved maps. It also contains a script that publishes the dynamic transform from the odometry frame to the base footprint frame.
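The dynamic transform from the odometry frame to the base footprint is essentially the robot's current planar pose expressed as a translation plus a rotation quaternion. The sketch below shows that conversion in plain Python. The function names and the frame names `odom` and `base_footprint` are assumptions for illustration, and the dictionary only mirrors the kind of fields a stamped transform carries. It is not the project's script.

```python
import math


def yaw_to_quaternion(yaw: float) -> tuple:
    """Return (x, y, z, w) for a rotation of `yaw` radians about the z axis."""
    return (0.0, 0.0, math.sin(yaw / 2.0), math.cos(yaw / 2.0))


def odom_to_base_transform(x: float, y: float, yaw: float) -> dict:
    """Describe the odometry-to-base transform for a planar pose (x, y, yaw)."""
    return {
        "parent_frame": "odom",           # assumed frame name
        "child_frame": "base_footprint",  # assumed frame name
        "translation": (x, y, 0.0),       # the robot stays on the ground plane
        "rotation": yaw_to_quaternion(yaw),
    }
```

A broadcaster node would rebuild this transform each time a new odometry pose arrives and send it out with the current timestamp.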

Running the Autonomous Navigation

To achieve autonomous navigation, the following steps were performed:

- Launch the navigation stack:

    roslaunch navigation_stack launch-all.launch

- Open RViz to visualize the navigation components.

- In RViz, set an approximate initial pose with the 2D Pose Estimate tool so that the laser scan lines up with the map boundaries.

- Set the final navigation goal (a 2D position and heading) with the 2D Nav Goal tool.

- The robot attempts to navigate to the specified goal.

Challenges and Solutions

During the project, I faced several challenges, including:

- Sensor Calibration: Ensuring accurate sensor data was crucial. I resolved this by performing extensive calibration tests and adjusting the parameters accordingly.

- Odometry Drift: Odometry data tended to drift over time, affecting navigation accuracy. I mitigated this by implementing sensor fusion techniques to combine data from multiple sensors.

- Obstacle Avoidance: The robot had to navigate around obstacles in real time. I addressed this by fine-tuning the parameters of the navigation stack and integrating real-time obstacle detection algorithms.
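As an illustration of the sensor fusion idea mentioned for odometry drift, here is a minimal sketch of a complementary filter for the robot's heading. This is one common technique, chosen here as an example. It is not necessarily the method used in the project, and the second heading source (`absolute_heading`), the function names, and the default `alpha` are assumptions.

```python
import math


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians to the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def fuse_heading(prev_heading: float, gyro_rate: float, dt: float,
                 absolute_heading: float, alpha: float = 0.98) -> float:
    """Complementary filter for heading.

    The integrated rate (gyro or wheel odometry) is trusted over short
    time scales, while an absolute heading source (hypothetical here,
    for example a scan-matching estimate) slowly pulls out the drift.
    """
    predicted = prev_heading + gyro_rate * dt
    # Correct along the shortest angular difference to avoid jumps near +/- pi.
    error = wrap_angle(absolute_heading - predicted)
    return wrap_angle(predicted + (1.0 - alpha) * error)
```

A value of `alpha` close to 1 keeps the estimate smooth but corrects drift slowly. A lower value follows the absolute source more closely and passes more of its noise through.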

Conclusion

My in-person internship at Ugo Inc was a remarkable experience that let me work in depth on autonomous navigation and lidar mapping. The project strengthened my robotics skills and gave me practical experience in building and deploying robotic solutions. The hands-on work and the challenges I faced along the way were instrumental in my professional growth.